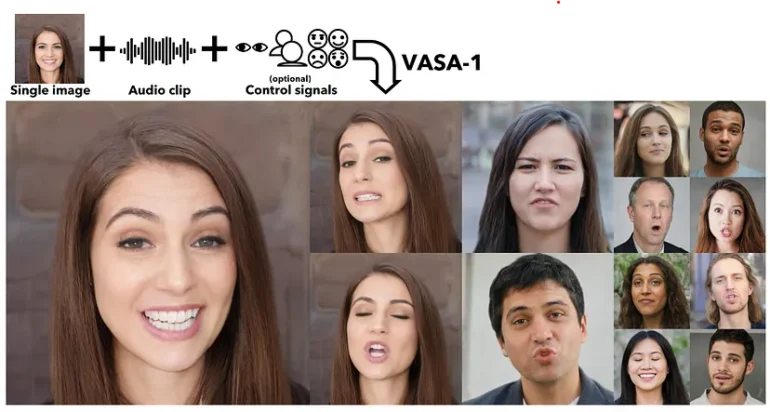

Imagine getting a whole real video from a single picture of the person who is speaking with full emotions. Microsoft made a ground-breaking announcement of an advanced AI video generation system VASA-1 (Visual Affective Skills Animation). This AI tool VASA-1 can transform a single photo of yours into a real-time short video featuring a talking face that syncs perfectly with an audio clip.

Having an AI analyze an image and a provided audio clip to generate realistic lip movement even subtle expressions that match the speaker’s tone. This new AI tool can be quite beneficial and has great potential to transform video creation in fields like education, social media, entertainment, and many other fields.

VASA-1 AI tool offers its users the ability to modify and get better results from the subject’s eye movements, and emotions expressed. This offers a wide range of emotions like generating different facial subtleties, which sounds like they have made it for extremely convincing results.

How the VASA-1 AI Model Works and What This Tool is Capable of?

While looking closely at how Microsoft’s VASA-1 model works, the magic behind is its deep learning capabilities. This system uses a process named “Disentanglement” that allows the system to control and edit all the required facial expressions. Thus, allowing the system to understand all the complex relations between facial features, emotions, and speech patterns.

Here are some steps of how this AI tool works.

- A user provides VASA-1 with a single portrait image and an audio clip.

- VASA-1 precisely analyzes the given image while checking and identifying facial benchmarks including eye, nose, and mouth.

- After this, this tool extracts the required information from the audio clip.

- Thus, using its all learning knowledge, VASA-1 creates a quite realistic video including all the facial features in the image to match the video.

According to MSPower, VASA-1 is capable of producing all the normal videos as it is not trained in artistic photos, singing voices, or non-English speech. But with time it will be capable of featuring this kind of video as well.

Microsoft acknowledges that a tool like this has plentiful scope for misuse, but the researchers try to emphasize the potential positives of VASA-1. They’re not wrong, as a technology like this could mean next-level educational experiences that are available to more students than ever before, better assistance to people who have difficulties communicating, the capability to provide companionship, and improved digital therapeutic support.

Users said there are wide potential applications of Microsoft’s VASA-1 including using this tool in education, entertainment, and filmmakers. Even game developers can use VASA-1 to create or recreate characters using a photograph, potentially reducing the costs and time associated with traditional CGI techniques.

If users are going to use this technology for communication this could enable more personalized interactions in digital spaces, such as enhancing virtual meetings or providing customer services through avatars that mimic the user’s expressions.

Apart from all the benefits of using this VASA-1, there can be the potential for harm and wrongdoings with something like that. Thus, Microsoft owners stated that currently, they don’t have any plans to make VASA-1 available in any form to the public until it’s reassured that the technology will be used responsibly and by proper regulations.

OpenAI recently gained a lot of attention by introducing its own AI video generation tool, Sora. A tool that can generate videos from text descriptions. And now Microsoft marks a significant leap in creating all facial expression feature videos from a single picture called VASA-1. So, blurring the lines between reality and AI via these high-tech tools and making things more easier than ever.